Code Profiling: Monitoring and Speeding up your code

By Julia Piaskowski

Sources

Thomas Lumley, Github repo useRfasteR Hadley Wickham, Profiling, Advanced R Dirk Eddelbuettel, Rcpp

The Process for Improving Code:

(quote from Advanced R)

Find the biggest bottleneck (the slowest part of your code).

Try to eliminate it (you may not succeed but that's ok).

Repeat until your code is fast enough.

Easy peasy, right???

Some general guidelines for speeding up R code

-

Use data frames less - they are expensive to create, often copied in whole when modified, and their rownames attribute can really slow things down.

-

Be wary of using functions that copy objects in whole: c(), append(), cbind(), rbind(), or paste(). When used in loops, you can get a massive proliferation of objects in memory.

-

Use vectorised functions:

* apply, lapply, sapply, vapply, tapply, mapply

* rowSums, colSums, rowMeans, colMeans, cumsum, diff

-

Base functions are designed to handle wildly different input. Consider rewriting base functions for highly repetitive tasks.

-

Use parallel::mclapply for parallelising functions.

-

Consider an optimized matrix algebra library (beyond BLAS) for better performance (e.g. Apple vecLib BLAS, openBLAS).

-

If you work with sparse matrices, use tools for them like the package 'Matrix'.

-

For huge objects, consider storing the information in a database and accessing it with 'dbplyr'. The packages 'dbglm' and 'tidypredict' will also do model fitting with data inside a database.

-

Another solution for large objects are specialized formats like HDF5 or netCDF.

-

Take advantage of the Rcpp suite of programs - not just for C/C++ programmers (e.g. RcppArmadillo::fastlm).

-

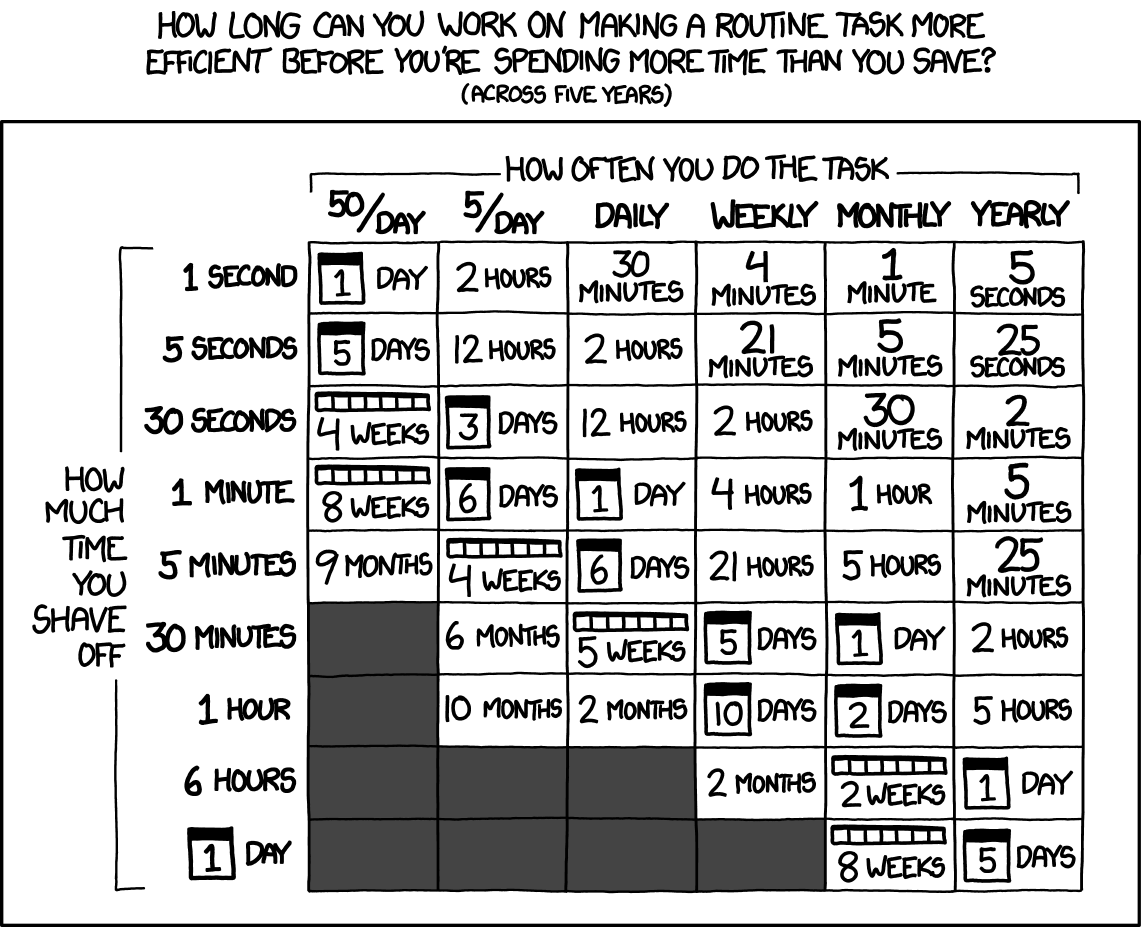

Check your code with benchmarking!!!

Let's do some benchmarking!

Important: don't (re)install 'compiler'; you should just be able to load it in R v3.5 and later.

1pck <- c("pryr","microbenchmark", "profvis", "compiler", "mnormt")

2invisible(lapply(pck, library, character.only = T))

3

4## Warning: package 'pryr' was built under R version 3.5.1

5

6##

7## Attaching package: 'pryr'

8

9## The following object is masked from 'package:data.table':

10##

11## address

12

13## Warning: package 'microbenchmark' was built under R version 3.5.1

14

15## Warning: package 'profvis' was built under R version 3.5.1

First, Turn off the just-in-time compiler. Note that return value is what the JIT was set at previously (default = 3).

The microbenchmark function

- for evaluating small snippets of code

- below is a comparison of several approaches to calculating a mean

1a <- function() {

2 m <- sample(1:100, 2)

3 data.x <- lapply(m, function(x) rnorm(1e4, mean = x))

4 do.call("cbind", data.x)

5}

6

7some_data <- a()

8dim(some_data)

9

10## [1] 10000 2

11

12microbenchmark(

13 mean_loop = apply(some_data, 2, mean),

14 mean_vec = colMeans(some_data),

15 mean_manual = apply(some_data, 2, function(x) sum(x)/length(x)),

16 mean_manual_ultra = apply(some_data, 2, function(x){

17 total = 0

18 n = 0

19 i = 1

20 while(!is.na(x[i])) {

21 total = total + x[i]

22 n = n+1

23 i = i+1

24 }

25 total/n

26 })

27)

28

29## Unit: microseconds

30## expr min lq mean median uq

31## mean_loop 161.333 178.4915 211.83635 196.1325 234.3010

32## mean_vec 21.491 24.5375 33.40574 30.6315 39.7725

33## mean_manual 135.673 148.0220 181.27308 169.9925 203.6700

34## mean_manual_ultra 26512.007 26947.7305 28382.64290 27481.9230 28278.1590

35## max neval

36## 388.737 100

37## 118.353 100

38## 324.268 100

39## 39815.319 100

Prevent multiple dispatch:

- the function mean() is meant to handle several different types of data

- specifying the method (thus implying a certain type of input) can speed up the process for small data sets

1# the function mean() calls a different function depending on the object specified

2methods(mean)

3

4## [1] mean.Date mean.default mean.difftime mean.IDate* mean.POSIXct

5## [6] mean.POSIXlt

6## see '?methods' for accessing help and source code

7

8x1 <- list(e2 = runif(1e2), e4 = runif(1e4), e6 = runif(1e6))

9

10lapply(x1, function(x)

11 microbenchmark(

12 mean(x),

13 mean.default(x)

14 )

15)

16

17## $e2

18## Unit: nanoseconds

19## expr min lq mean median uq max neval

20## mean(x) 3528 3529 4189.61 3849 3850 23735 100

21## mean.default(x) 642 963 1322.17 963 1283 15076 100

22##

23## $e4

24## Unit: microseconds

25## expr min lq mean median uq max neval

26## mean(x) 21.169 21.491 23.26722 21.811 22.774 50.036 100

27## mean.default(x) 18.282 18.603 19.61392 18.604 19.566 38.489 100

28##

29## $e6

30## Unit: milliseconds

31## expr min lq mean median uq max

32## mean(x) 1.858365 1.901665 1.934968 1.923636 1.955228 2.134843

33## mean.default(x) 1.842008 1.880336 1.911076 1.905353 1.938871 2.048243

34## neval

35## 100

36## 100

37

38# I suspect the improvement in speed for smaller objects but larger objects is related to big O notation -- these smaller objects are impacted by constants

39

40# tracking package type, etc

41otype(mean)

42

43## [1] "base"

44

45ftype(mean)

46

47## [1] "s3" "generic"

48

49showMethods(mean) #for S4

50

51##

52## Function "mean":

53## <not an S4 generic function>

54

55methods(mean)

56

57## [1] mean.Date mean.default mean.difftime mean.IDate* mean.POSIXct

58## [6] mean.POSIXlt

59## see '?methods' for accessing help and source code

60

61#this doesn't always work:

62methods(var)

63

64## Warning in .S3methods(generic.function, class, parent.frame()): function

65## 'var' appears not to be S3 generic; found functions that look like S3

66## methods

67

68## [1] var.test var.test.default* var.test.formula*

69## see '?methods' for accessing help and source code

70

71getAnywhere(var)

72

73## A single object matching 'var' was found

74## It was found in the following places

75## package:stats

76## namespace:stats

77## with value

78##

79## function (x, y = NULL, na.rm = FALSE, use)

80## {

81## if (missing(use))

82## use <- if (na.rm)

83## "na.or.complete"

84## else "everything"

85## na.method <- pmatch(use, c("all.obs", "complete.obs", "pairwise.complete.obs",

86## "everything", "na.or.complete"))

87## if (is.na(na.method))

88## stop("invalid 'use' argument")

89## if (is.data.frame(x))

90## x <- as.matrix(x)

91## else stopifnot(is.atomic(x))

92## if (is.data.frame(y))

93## y <- as.matrix(y)

94## else stopifnot(is.atomic(y))

95## .Call(C_cov, x, y, na.method, FALSE)

96## }

97## <bytecode: 0x00000000117ce320>

98## <environment: namespace:stats>

Find the bottlenecks with Rprof()

- writes stack calls to disk along with memory usage and vector duplication

- you create a .prof file to do this and then close it when done with profiling

1Rprof("permute.prof", memory.profiling = T)

2

3sigma.mv <- diag(1, nrow = 5, ncol = 5)

4sigma.mv[upper.tri(sigma.mv)] = 0.5

5sigma.mv[lower.tri(sigma.mv)] = 0.5

6

7mvn.data <- rmnorm(1e3, mean = rep(0, 5), varcov = sigma.mv)

8colnames(mvn.data) <- c(paste0("x",1:5))

9

10kmeans.Ftest <- function(kmean_obj) {

11 df.1 = length(kmean_obj$size) - 1

12 df.2 = length(kmean_obj$cluster) - length(kmean_obj$size)

13 betw_ms <- kmean_obj$tot.withinss/df.1

14 with_ms <- kmean_obj$betweenss/df.2

15 fratio = betw_ms/with_ms

16 pval <- pf(fratio, df1 = df.2, df2 = df.1, lower.tail = F)

17 stuff = c(fratio, df.1, df.2, pval)

18 names(stuff) <- c('F-ratio', 'df 1','df 2', 'p-value')

19 return(stuff)

20}

21

22kmeans.optimiz <- lapply(2:10, function(x) {

23 results = kmeans(mvn.data, centers = x, nstart = 15, algorithm = "MacQueen",

24 iter.max = 50)

25 kmeans.Ftest(results)

26})

27

28kmeans.final <- do.call("rbind", kmeans.optimiz)

29

30Rprof(NULL)

31summaryRprof("permute.prof") #, memory = "both")

32

33## $by.self

34## self.time self.pct total.time total.pct

35## ".C" 0.10 71.43 0.10 71.43

36## "apply" 0.02 14.29 0.02 14.29

37## "as.list" 0.02 14.29 0.02 14.29

38##

39## $by.total

40## total.time total.pct self.time self.pct

41## "block_exec" 0.14 100.00 0.00 0.00

42## "call_block" 0.14 100.00 0.00 0.00

43## "doTryCatch" 0.14 100.00 0.00 0.00

44## "eval" 0.14 100.00 0.00 0.00

45## "evaluate" 0.14 100.00 0.00 0.00

46## "evaluate::evaluate" 0.14 100.00 0.00 0.00

47## "evaluate_call" 0.14 100.00 0.00 0.00

48## "FUN" 0.14 100.00 0.00 0.00

49## "handle" 0.14 100.00 0.00 0.00

50## "in_dir" 0.14 100.00 0.00 0.00

51## "kmeans" 0.14 100.00 0.00 0.00

52## "knit" 0.14 100.00 0.00 0.00

53## "knit2wp" 0.14 100.00 0.00 0.00

54## "lapply" 0.14 100.00 0.00 0.00

55## "process_file" 0.14 100.00 0.00 0.00

56## "process_group" 0.14 100.00 0.00 0.00

57## "process_group.block" 0.14 100.00 0.00 0.00

58## "source" 0.14 100.00 0.00 0.00

59## "timing_fn" 0.14 100.00 0.00 0.00

60## "try" 0.14 100.00 0.00 0.00

61## "tryCatch" 0.14 100.00 0.00 0.00

62## "tryCatchList" 0.14 100.00 0.00 0.00

63## "tryCatchOne" 0.14 100.00 0.00 0.00

64## "withCallingHandlers" 0.14 100.00 0.00 0.00

65## "withVisible" 0.14 100.00 0.00 0.00

66## ".C" 0.10 71.43 0.10 71.43

67## "do_one" 0.10 71.43 0.00 0.00

68## "unique" 0.04 28.57 0.00 0.00

69## "unique.matrix" 0.04 28.57 0.00 0.00

70## "apply" 0.02 14.29 0.02 14.29

71## "as.list" 0.02 14.29 0.02 14.29

72## "alist" 0.02 14.29 0.00 0.00

73##

74## $sample.interval

75## [1] 0.02

76##

77## $sampling.time

78## [1] 0.14

Use profvis to visualize performance

- nice graphical output

- native in RStudio (lots of documentation)

1p <- profvis({

2 mean_loop = apply(some_data, 2, mean)

3 mean_vec = colMeans(some_data)

4 mean_manual = apply(some_data, 2, function(x) sum(x)/length(x))

5 mean_manual_ultra = apply(some_data, 2, function(x){

6 total = 0

7 n = 0

8 i = 1

9 while(!is.na(x[i])) {

10 total = total + x[i]

11 n = n+1

12 i = i+1

13 }

14 total/n

15 })

16})

17htmlwidgets::saveWidget(p, "profile.html")

18

19# Can open in browser from R

20browseURL("profile.html")

Explore source code

How to access function source code (if you didn't write the function yourself)

- Type the function name (without parentheses):

eigen - Find namespace and methods associated:

methods("princomp"); getAnywhere("princomp.default") - Use the package pryr to search for C code on GitHub

- Download the entire package and explore the code

1svd

2La.svd

3

4# routes us to search on GitHub (which may or may not be helpful)

5try(show_c_source(.Internal(La_svd(x))))

6show_c_source(.Internal(mean(x)))

7

8# download.packages("broman", repos = "https://cloud.r-project.org", destdir = getwd(), type = "source")

Trace objects copied

- use tracemem() to track particular objects

1a <- letters[sample(10)]

2tracemem(a)

3

4## [1] "<0000000030B1AD78>"

5

6a[1] <- "Z"

7

8## tracemem[0x0000000030b1ad78 -> 0x0000000030b1b508]: eval eval withVisible withCallingHandlers doTryCatch tryCatchOne tryCatchList tryCatch try handle timing_fn evaluate_call <Anonymous> evaluate in_dir block_exec call_block process_group.block process_group withCallingHandlers process_file knit knit2wp eval eval withVisible source

9

10b <- a[1:5] # not copied

Memory

- How much memory is being used? Note that R requests memory from your computer in big chunks then manages it itself.

1mem_used()

2

3## 285 MB

4

5object.size(x1) #base

6

7## 8081384 bytes

8

9object_size(x1) #pryr

10

11## 8.08 MB

12

13compare_size(x1) #between base R and pryr

14

15## base pryr

16## 8081384 8081384

Read the documentation for pryr functions for more useful functions.

Now that we are all done, turn the JIT compiler back on:

More Tips from Advanced R

These are designed to reduce internal checks

- read.csv(): specify known column types with colClasses.

- factor(): specify known levels with levels.

- cut(): don't generate labels with labels = FALSE if you don't need them, or, even better, use findInterval().

- unlist(x, use.names = FALSE) is much faster than unlist(x).

- interaction(): if you only need combinations that exist in the data, use drop = TRUE.

Remember!

Top image from HackerImage website.